Supporting Creators’ Sensemaking and Ideation with LLM-Powered Audience Personas

Abstract

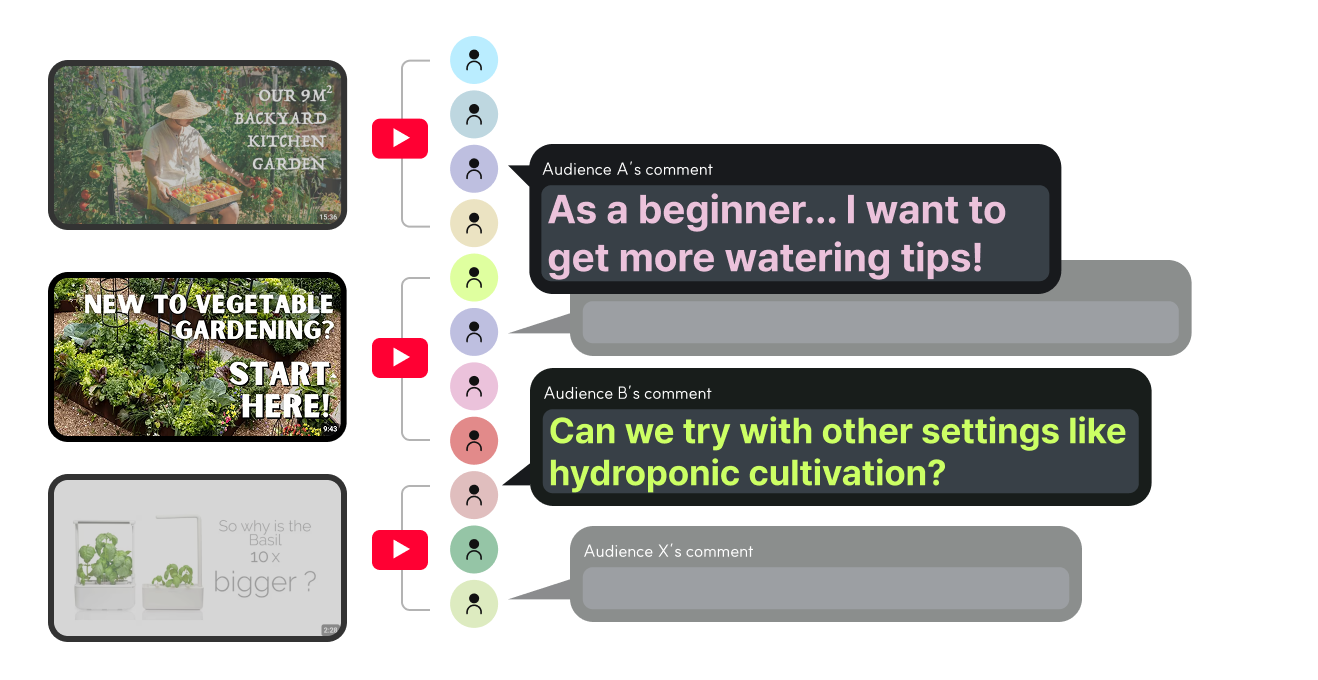

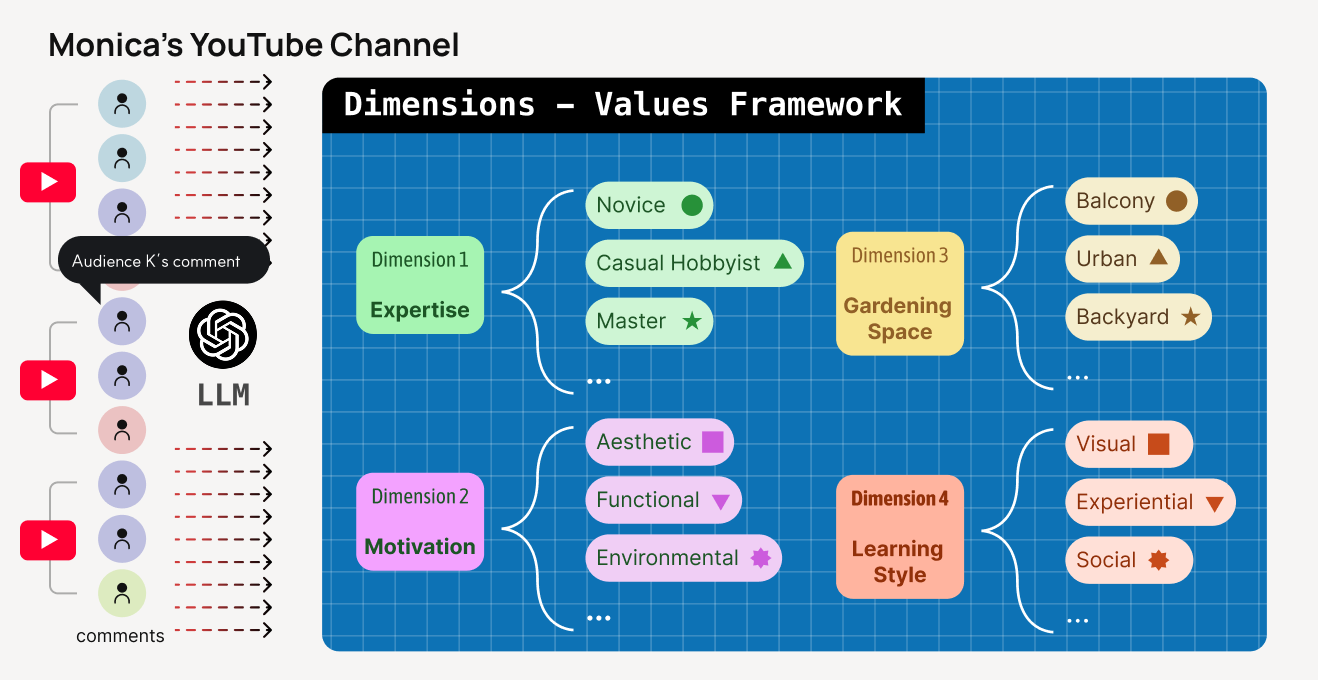

A content creator's success depends on understanding their audience, but existing tools fail to provide

in-depth insights and actionable feedback necessary for effectively targeting their audience. We present

Proxona, an LLM-powered system that transforms static audience comments into interactive, multi-dimensional

personas, allowing creators to engage with them to gain insights, gather simulated feedback, and refine

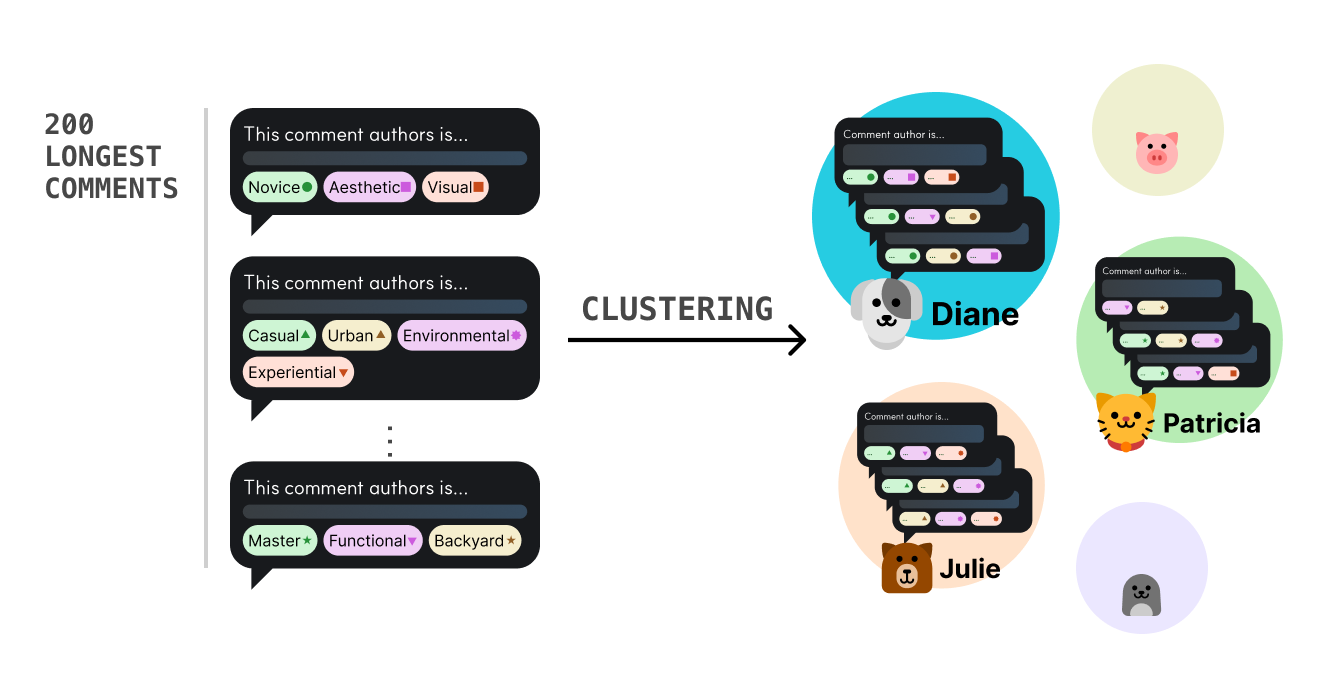

content. Proxona distills audience traits from comments, into dimensions (categories) and values

(attributes), then clusters them into interactive personas representing audience segments. Technical

evaluations show that Proxona generates diverse dimensions and values, enabling the creation of personas

that sufficiently reflect the audience and support data grounded conversation. User evaluation with 11

creators confirmed that Proxona helped creators discover hidden audiences, gain persona-informed insights on

early-stage content, and allowed them to confidently employ strategies when iteratively creating storylines.

Proxona introduces a novel creator-audience interaction framework and fosters a persona-driven, co-creative

process.
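The distill-then-cluster idea in the abstract can be illustrated with a toy sketch. This is not the Proxona implementation: the `DIMENSIONS` table, the keyword cues, and the function names are all hypothetical, keyword matching stands in for the LLM that infers dimensions and values, and grouping comments by identical trait combinations stands in for clustering.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical dimension -> value -> cue phrases. In the real system an LLM
# infers dimensions and values from comments; this table is only a stand-in.
DIMENSIONS = {
    "expertise": {
        "beginner": ["new to", "first time"],
        "expert": ["for years", "professional"],
    },
    "motivation": {
        "learning": ["learn", "tutorial"],
        "entertainment": ["fun", "relax"],
    },
}


@dataclass
class Persona:
    traits: tuple  # sorted (dimension, value) pairs shared by the segment
    comments: list = field(default_factory=list)  # comments grounding the persona


def tag_comment(comment):
    """Return the sorted (dimension, value) pairs whose cues appear in the comment."""
    text = comment.lower()
    traits = []
    for dimension, values in DIMENSIONS.items():
        for value, cues in values.items():
            if any(cue in text for cue in cues):
                traits.append((dimension, value))
                break  # keep at most one value per dimension
    return tuple(sorted(traits))


def build_personas(comments):
    """Group comments that share the same trait combination into one persona."""
    groups = defaultdict(list)
    for comment in comments:
        traits = tag_comment(comment)
        if traits:  # skip comments that match no dimension
            groups[traits].append(comment)
    return [Persona(traits, grouped) for traits, grouped in groups.items()]
```

Each resulting `Persona` keeps the comments it was built from, which is what a data-grounded conversation would draw on.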

Interfaces

Exploration

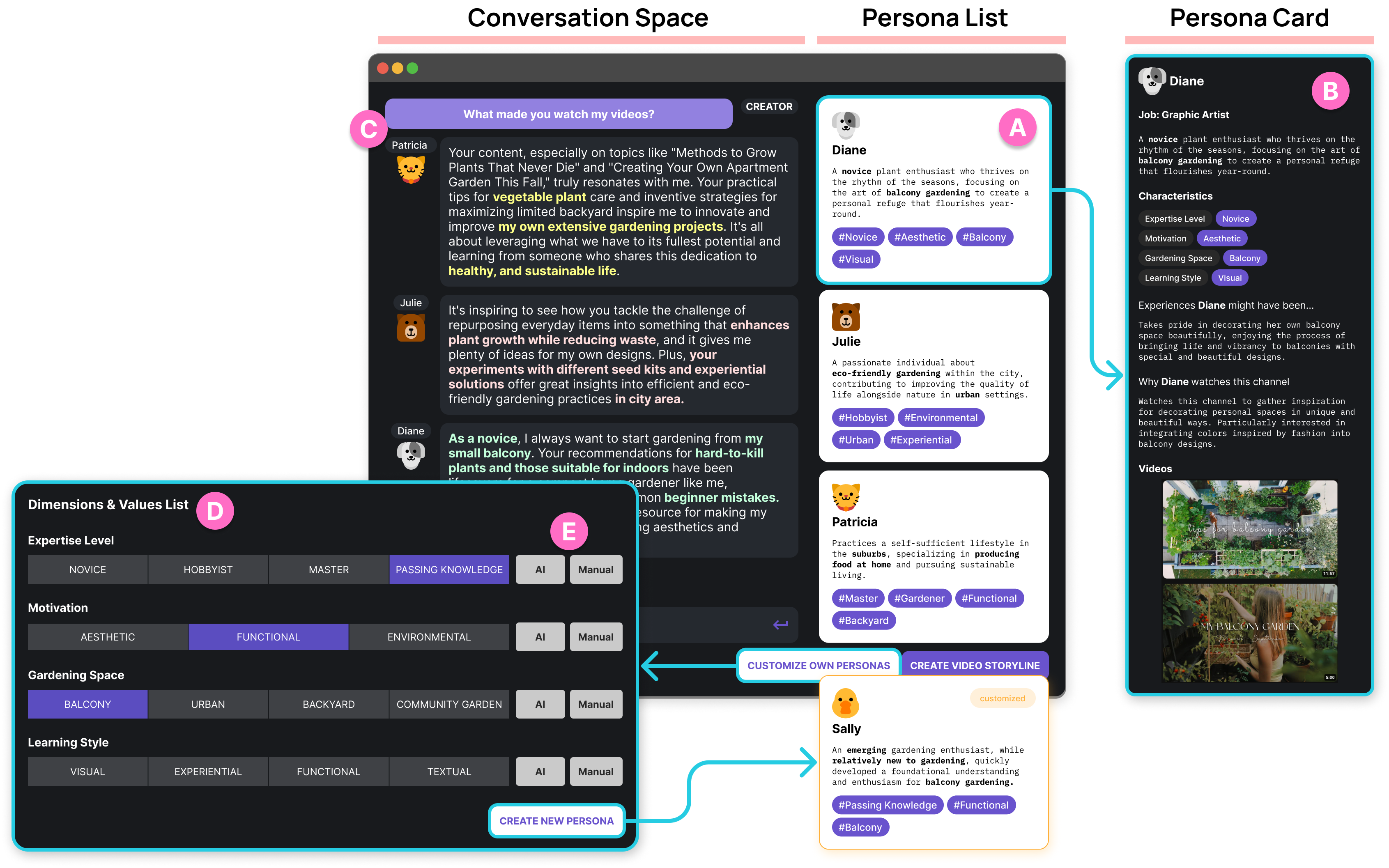

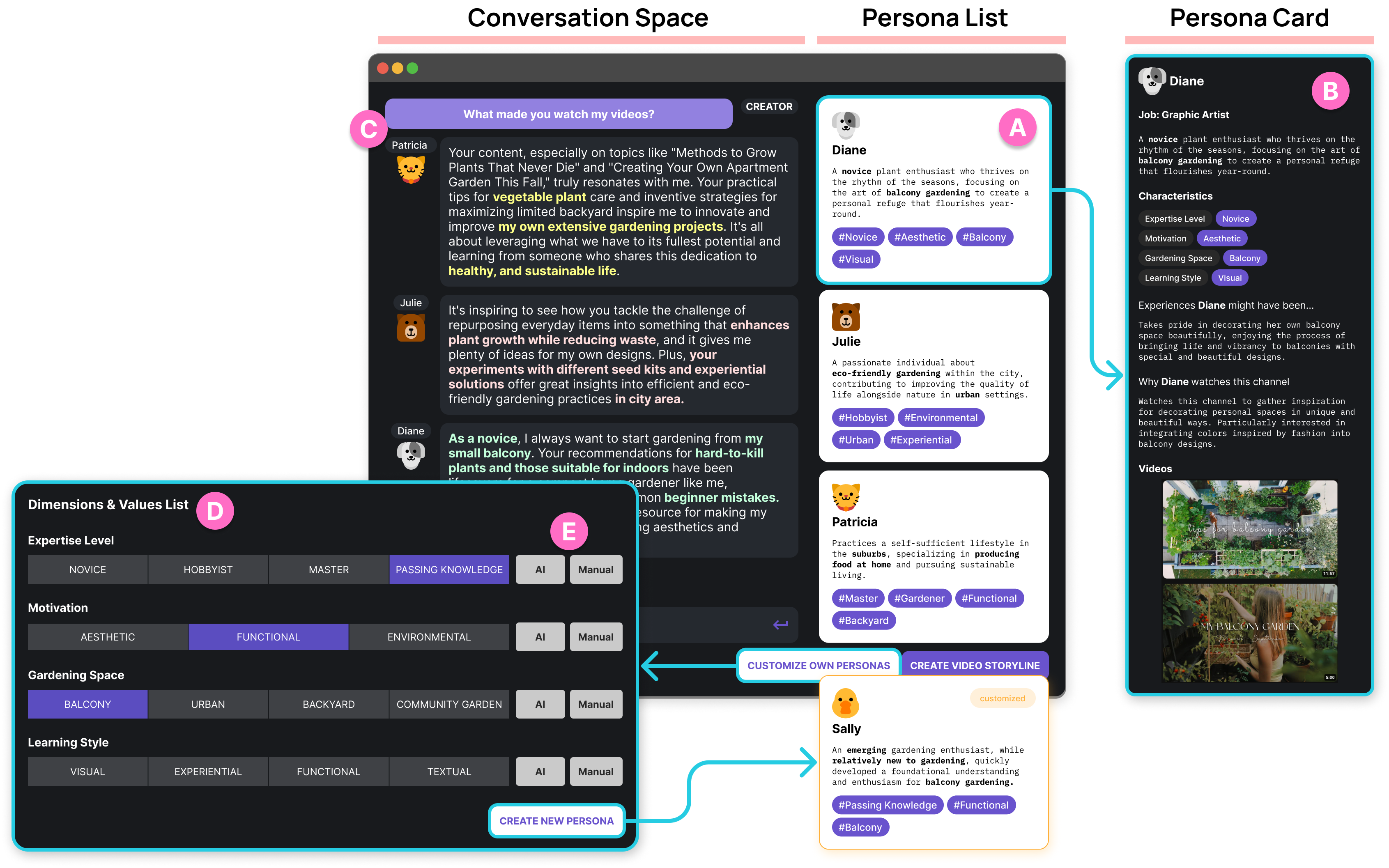

Exploration page, where creators explore and interact with audience personas. (A) Persona list showing the available audience personas. (B) Persona cards detailing each persona's profile. (C) Conversation space for chatting freely with personas; creators can ask personas questions and request their opinions. (D) Dimensions and values list showing diverse audience attributes. (E) Customization options for creating new personas by selecting or generating different dimension-value combinations.

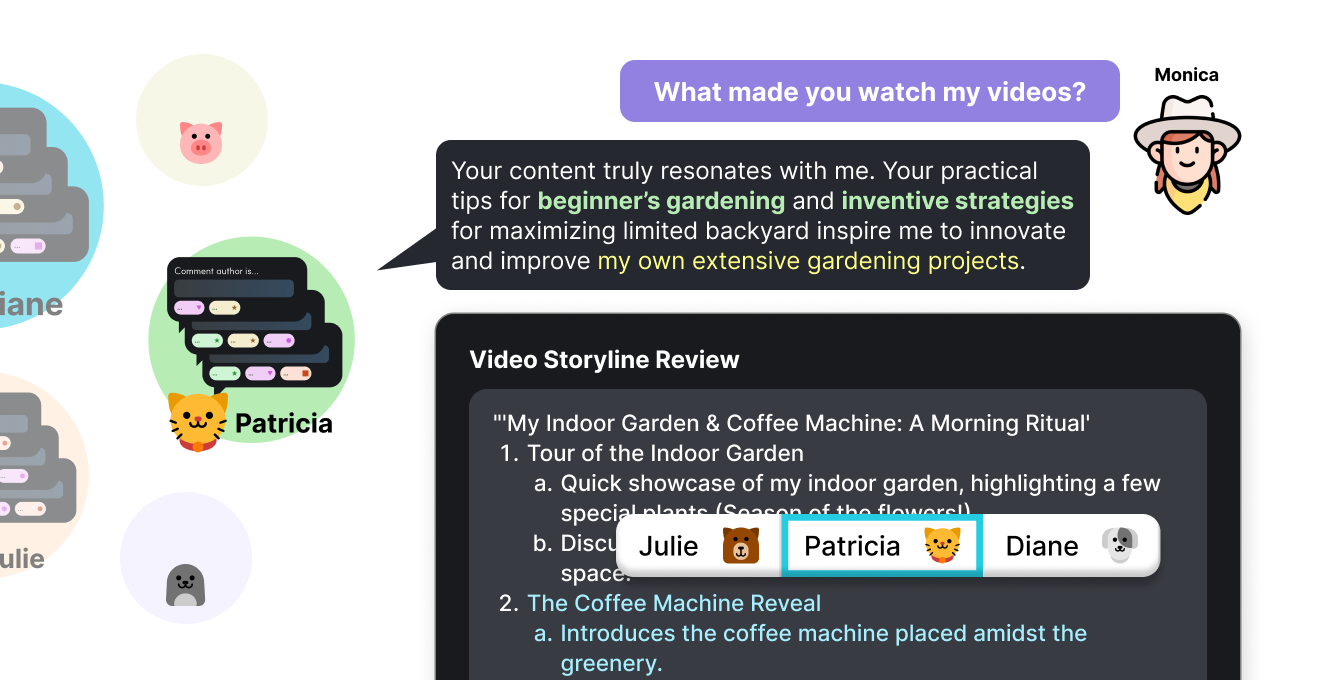

Creation

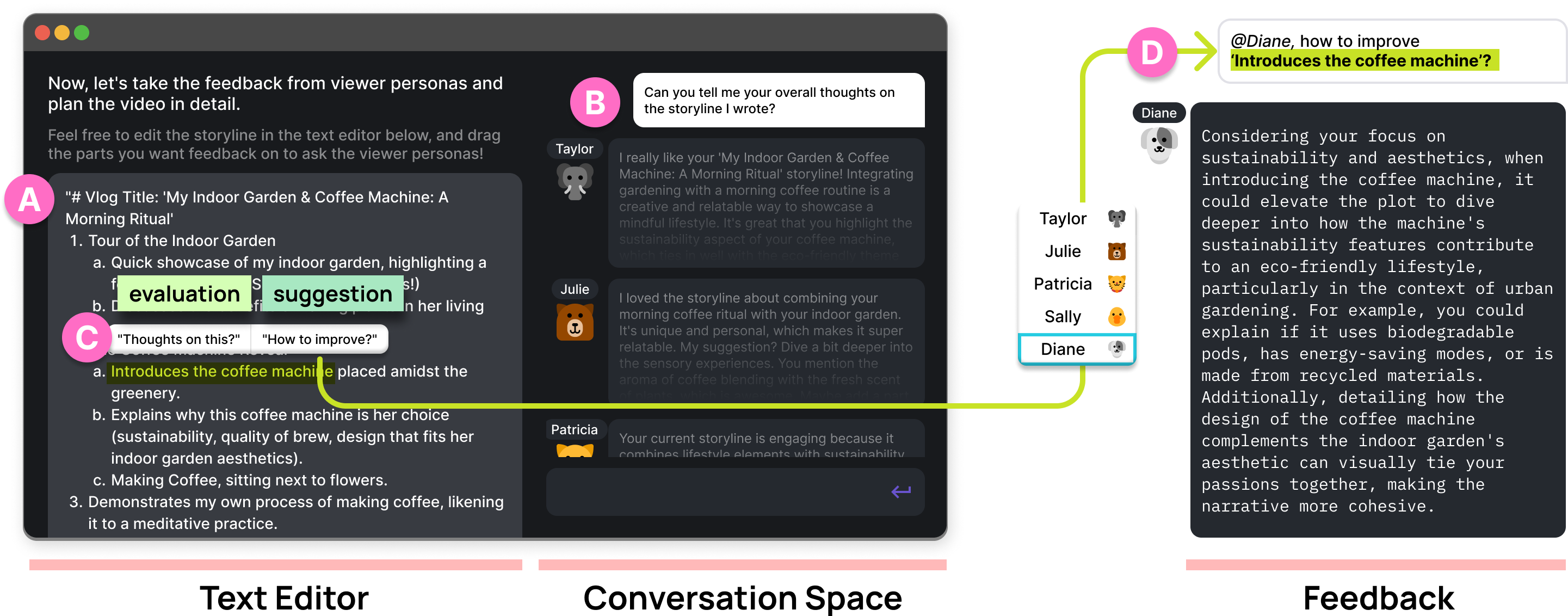

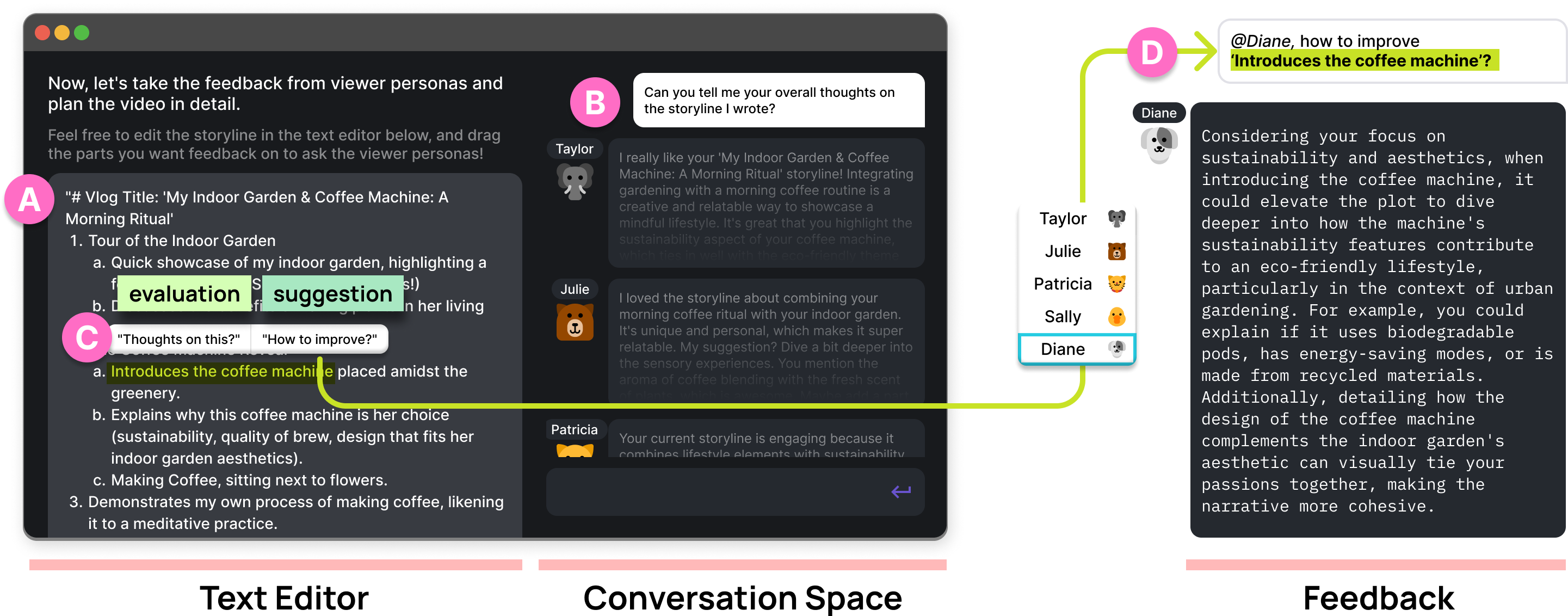

Creation page, where creators write a video storyline, discuss their plot with personas, and request feedback on their written content. (A) Text editor where creators draft their video storylines. (B) Conversation space where creators ask personas for their thoughts on the storyline. (C) Feedback feature that lets creators get [evaluation] or [suggestions] on a particular section of the text from a particular persona. (D) Example feedback provided by a chosen persona (Diane).
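The feedback feature in (C) can be thought of as a request that combines a persona, a selected section of the storyline, and a feedback mode. The sketch below shows one way to assemble such a request as an LLM prompt. The prompt wording, the `PersonaProfile` class, and `build_feedback_prompt` are assumptions made for illustration and are not taken from Proxona.

```python
from dataclasses import dataclass

# The two feedback modes named in the interface.
FEEDBACK_MODES = ("evaluation", "suggestions")


@dataclass
class PersonaProfile:
    name: str
    traits: dict  # dimension -> value; hypothetical profile format


def build_feedback_prompt(persona, storyline, selection, mode):
    """Assemble a prompt asking one persona for feedback on one selected section."""
    if mode not in FEEDBACK_MODES:
        raise ValueError(f"mode must be one of {FEEDBACK_MODES}")
    if selection not in storyline:
        raise ValueError("selection must be a section of the storyline")
    trait_text = "; ".join(f"{dim}: {val}" for dim, val in persona.traits.items())
    task = {
        "evaluation": "Say what works and what does not work for you in the selected section.",
        "suggestions": "Suggest concrete changes to the selected section.",
    }[mode]
    return (
        f"You are {persona.name}, a viewer with these traits: {trait_text}.\n"
        f"Full storyline:\n{storyline}\n\n"
        f"Selected section:\n{selection}\n\n"
        f"{task}"
    )
```

Keeping the full storyline in the prompt alongside the selection lets the persona judge the section in context rather than in isolation.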

Insights from User Studies

How effectively can Proxona support creators in exploring audience traits for sensemaking and ideation?

Proxona enabled creators to explore nuanced audience traits—such as expertise level, motivation, and content preferences—grounded in real viewer comments.

In a user study with 11 YouTube creators, participants used Proxona to analyze their audience through persona exploration and interactive feedback.

✅ +1.36 improvement in perceived audience understanding

🔍 Helped discover previously overlooked audience segments

💬 Enhanced creators’ ability to make sense of content performance

“It helped me reflect on who I’m really creating for.”

With Proxona, how do creators integrate persona-informed insights into their creative practices?

Beyond audience understanding, Proxona supports creators during the ideation phase by simulating audience feedback on content drafts.

Participants used the system to test early-stage scripts and storyline ideas, receiving targeted insights from different persona types.

💬 Creators received tailored reactions to their script content

🛠️ Many made revisions to structure, tone, or clarity

🎯 Felt like "co-planning" content with the audience

“It was like workshopping my idea with a real viewer.”

BibTeX

@inproceedings{10.1145/3706598.3714034,
  author = {Choi, Yoonseo and Kang, Eun Jeong and Choi, Seulgi and Lee, Min Kyung and Kim, Juho},
  title = {Proxona: Supporting Creators' Sensemaking and Ideation with LLM-Powered Audience Personas},
  year = {2025},
  isbn = {9798400713941},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3706598.3714034},
  doi = {10.1145/3706598.3714034},
  booktitle = {Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems},
  articleno = {149},
  numpages = {32},
  keywords = {Large Language Models, Human-AI Interaction, Persona, Agent Simulation, Sensemaking, Ideation, Creative Iterations},
  location = {Yokohama, Japan},
  series = {CHI '25}
}

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. RS-2024-00406715) and by the Institute of Information & communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (No. RS-2024-00443251, Accurate and Safe Multimodal, Multilingual Personalized AI Tutors). We also acknowledge support from the Office of Naval Research (ONR: N00014-24-1-2290). Additionally, this work was supported by the National Science Foundation (USA) (DGE-2125858) and Good Systems, a UT Austin Grand Challenge for developing responsible AI technologies.